Use case

Gen AI Threats

Block Exfiltration to AI Platforms Like Google Gemini, ChatGPT and Deepseek

The Problem

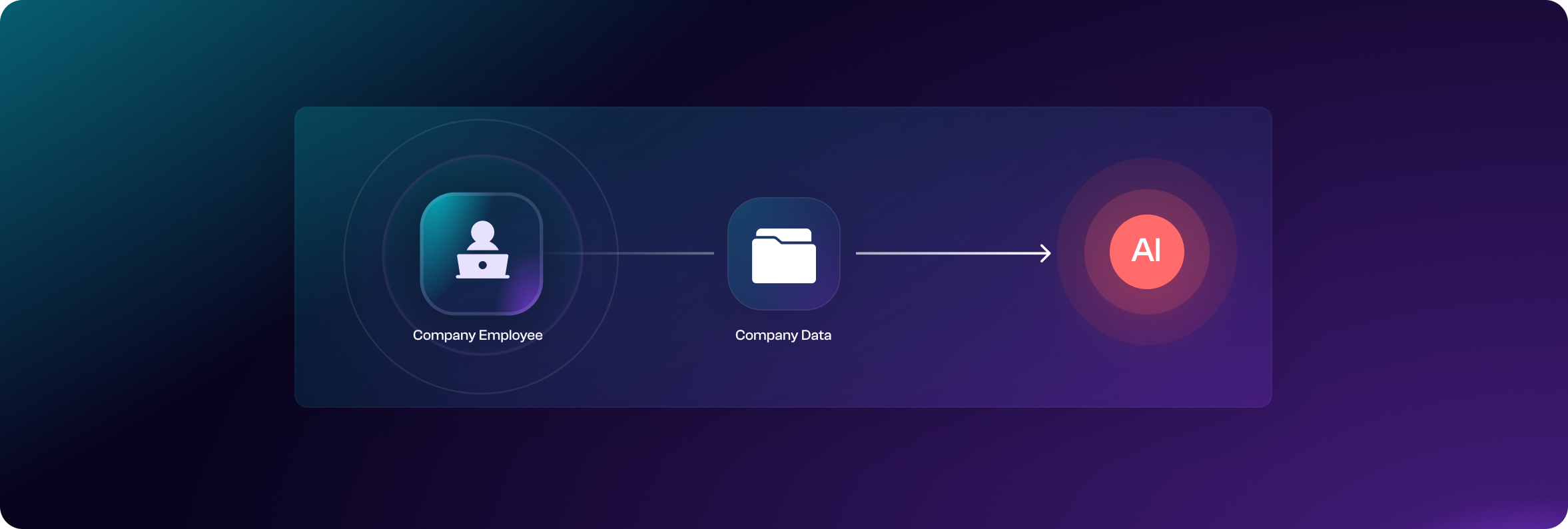

The rapid adoption of generative AI platforms such as Google Gemini, ChatGPT, Deepseek, and others opens a new frontier for data exfiltration risk. Whether users want to quickly solve a coding challenge, summarize internal documents, or brainstorm ideas with AI, they may copy and paste confidential or proprietary information into these systems. Even when users are well-intentioned, this can unintentionally leak sensitive data, including trade secrets, code snippets, and customer information.

Key Challenges

- Inadvertent Disclosure: Employees share proprietary data with third-party AI services that often lack robust privacy guarantees.

- Malicious Intent: Insiders who deliberately use AI platforms to exfiltrate valuable intellectual property.

- Lack of Visibility: Traditional security tools rarely monitor text-based exfiltration or ephemeral interactions with AI chatbots.

Security teams need holistic visibility into how sensitive data moves from users and devices to AI platforms, so they can proactively prevent leaks without throttling productivity.

The Anzenna Solution

Anzenna tackles this emerging risk with a graph-based, agentless platform that monitors and correlates user activity across endpoints, cloud apps, and web sessions. By stitching together related events, such as access to confidential files, copying content, and connecting to AI services, Anzenna enables real-time detection and proactive blocking of suspicious data transfers to platforms like Google Gemini, ChatGPT, and Deepseek.

Deep Visibility into AI Interactions

- Anzenna integrates directly with cloud and web gateways to monitor user uploads and interactions with AI platforms.

- Anzenna provides a unified view of who copies sensitive data, which application it originated from, and whether it was shared externally.

- Actionable Insights: Empowers security teams to quickly locate hotspots and apply targeted controls to reduce data leak risks.

Comprehensive Risk Scoring and Audit

- Anzenna provides a risk score for each incident, empowering security teams to prioritize critical threats.

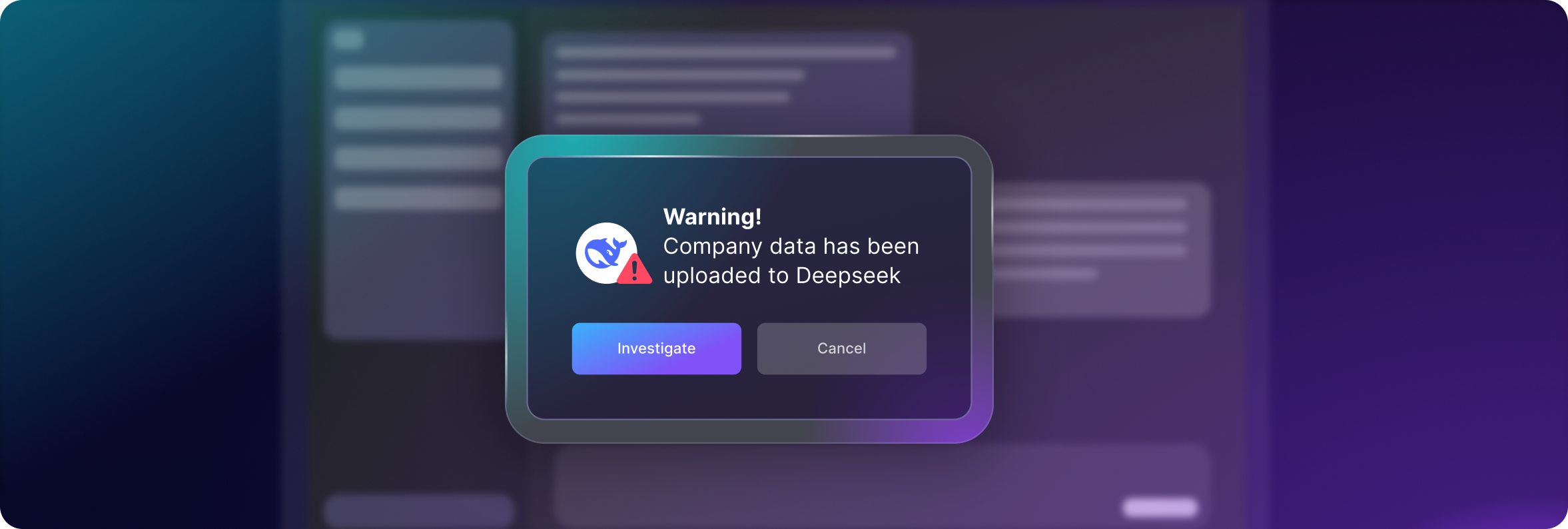

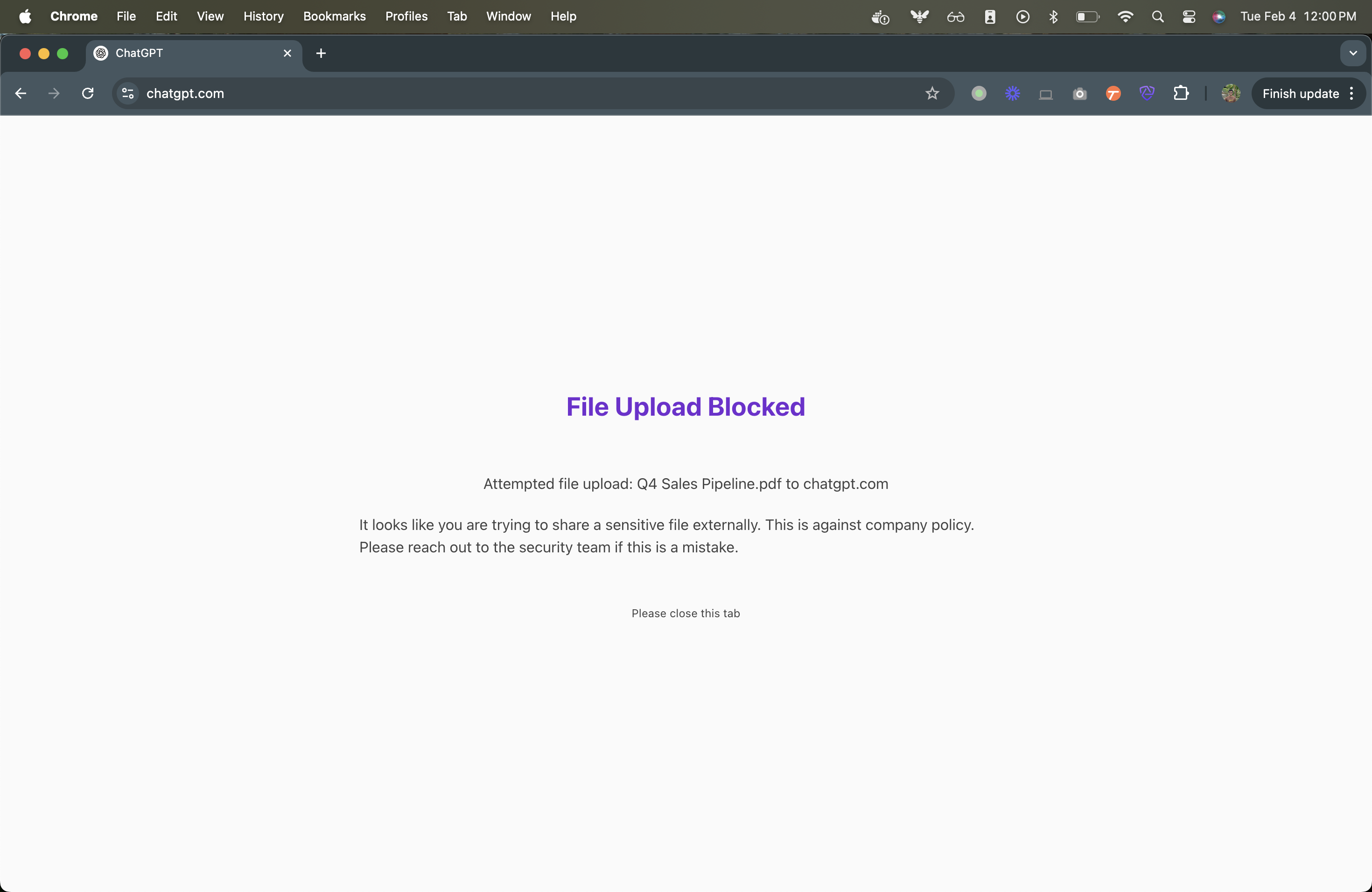

Real-Time Alerts & Blocking

- Detect attempts to paste sensitive text into AI chatbots in real time, before data is actually sent.

- Block or remediate high-risk actions automatically, minimizing the chance of accidental or malicious disclosure.

Holistic Exfiltration Control

- Whether data is shared through a web browser, API connections, or native AI plugins, Anzenna’s agentless approach ensures you maintain full oversight without hindering productivity.

- Enable targeted policies to allow safe usage while preventing known toxic data transfers.

Stay ahead of the evolving threat landscape with Anzenna. Protect your organization from unintentional or malicious data leaks into AI platforms without stifling innovation or productivity.
